EMOTION JOURNAL APP

_These screens were partly designed with the help of AI tools: Lovable._

This project aims to help people track their emotions and the somatic sensations connected to them.

Psychological Idea

CBT/DBT

Try the app here

(please open it on mobile)

Step 1

Design Mockups in Figma

Step 2

Upload to Lovable + Prompt

Purpose:

A simple, intuitive app focused solely on helping users log, understand, and reflect on their emotions.

📱 App Screens & Functionality

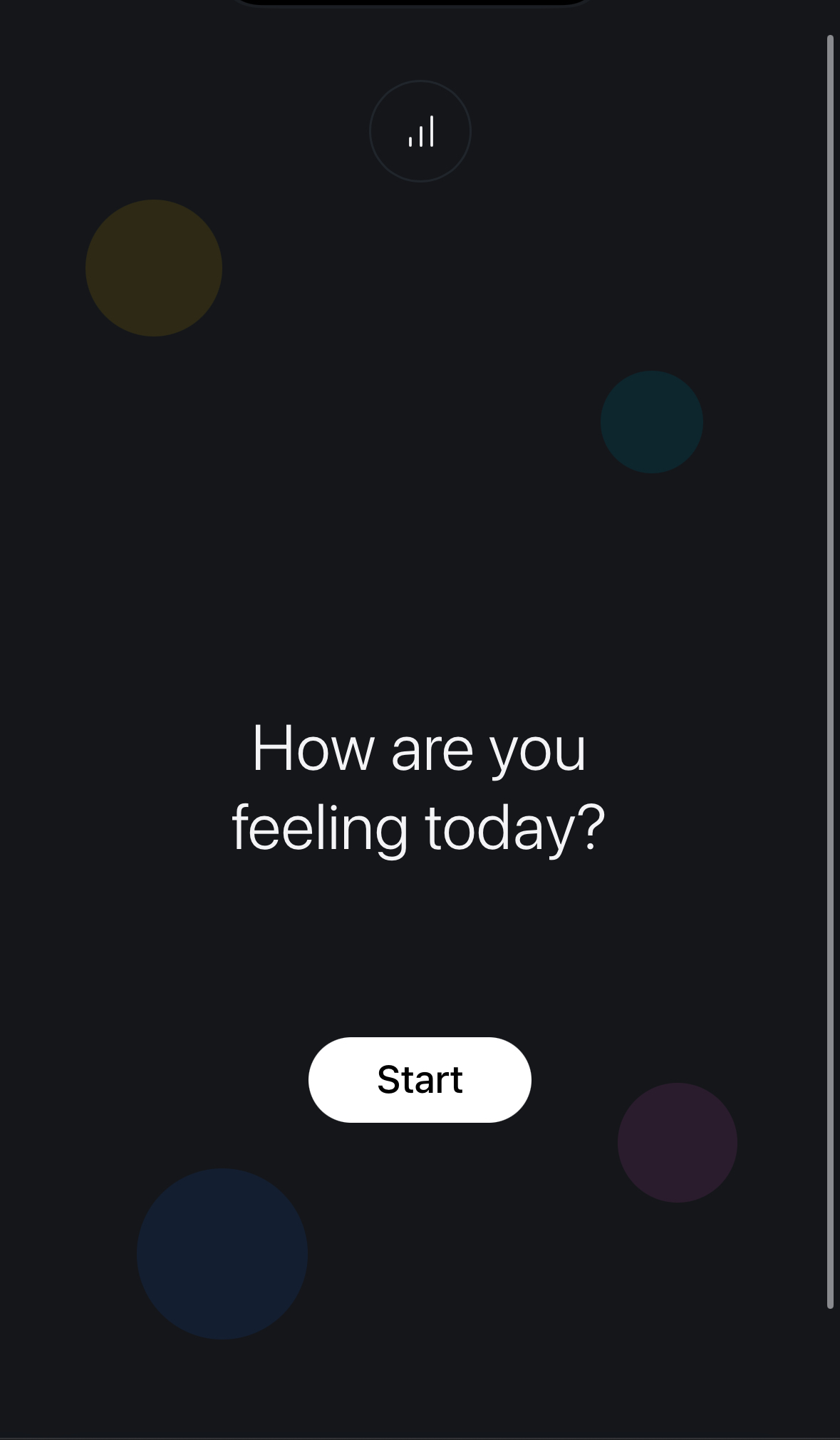

1. Welcome Screen

- A warm, inviting screen to introduce the user to the app.

- Visual style and layout aligned with the design in the provided screenshot.

- Purpose: Onboarding and setting the tone for calm emotional reflection.

2. Emotion Selection Screen

- Users choose from a range of emotions displayed as chips.

- Emotions are categorized based on the Emotion Wheel.

- Each emotional category is color-coded (e.g. all anxiety-related emotions are blue, anger-related ones might be red).

- Multi-selection possible.
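The chip behavior described above could be modeled roughly as follows. This is a minimal TypeScript sketch, not the code Lovable generated: the category names, the hex colors, and the `toggleEmotion` and `chipColor` helpers are assumptions made for illustration. Only the blue-for-anxiety and red-for-anger pairing comes from the brief.

```typescript
// Hypothetical categories; the brief only mentions anxiety (blue) and anger (red).
type EmotionCategory = "anxiety" | "anger" | "joy" | "sadness";

interface Emotion {
  id: string;
  label: string;
  category: EmotionCategory;
}

// One color per category, so every chip in a category looks the same.
const CATEGORY_COLORS: Record<EmotionCategory, string> = {
  anxiety: "#4A90D9", // blue, as in the brief
  anger: "#D9534F",   // red, as in the brief
  joy: "#F2C94C",     // assumed
  sadness: "#8E7CC3", // assumed
};

// Look up the chip color from the emotion's category.
function chipColor(emotion: Emotion): string {
  return CATEGORY_COLORS[emotion.category];
}

// Multi-selection: tapping a chip adds it, tapping it again removes it.
// Returns a new Set so the result can be used as immutable UI state.
function toggleEmotion(selected: Set<string>, emotionId: string): Set<string> {
  const next = new Set<string>(selected);
  if (next.has(emotionId)) {
    next.delete(emotionId);
  } else {
    next.add(emotionId);
  }
  return next;
}
```

Keeping the color on the category rather than on each emotion means a new emotion only needs a category to be styled consistently.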

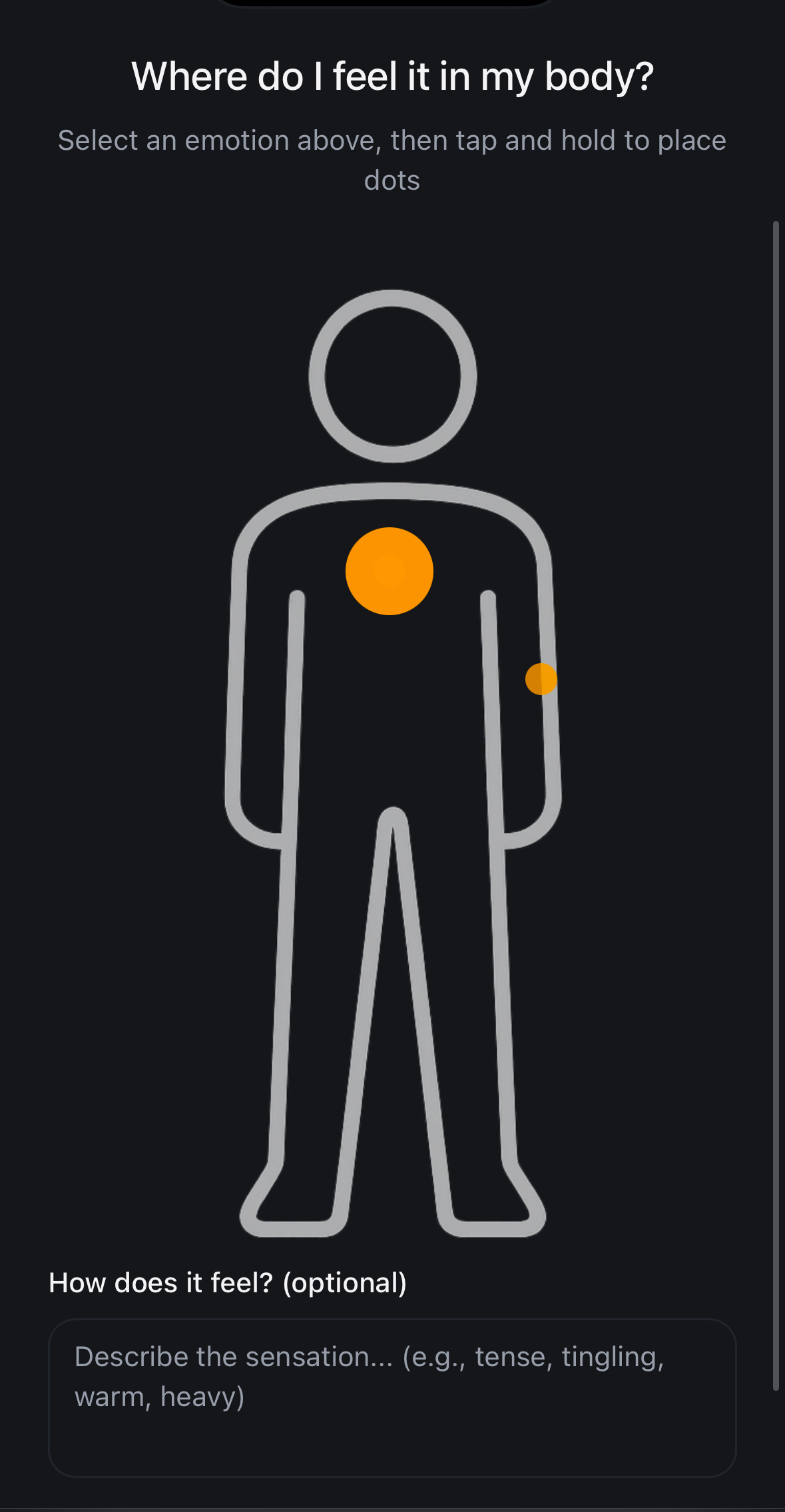

3. Body Sensation Mapping Screen

- Displays a gender-neutral body outline.

- Headline: "Where do you feel the emotion in your body?"

- Users can tap on the parts of the body where the emotion is physically felt.

- Each tap places a transparent, colored dot on that spot.

- The longer the user taps, the larger the dot becomes (indicating intensity).

- A text field allows users to describe the bodily sensation in that spot (e.g. “tight”, “tingling”, “burning”).
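The press-to-grow behavior could be expressed as a simple mapping from press duration to dot radius. The sketch below is one possible reading of the brief, not the generated implementation: the radius limits, the two-second cap, the linear scaling, and the names `radiusForPress` and `createDot` are all assumptions.

```typescript
// A dot placed on the body outline. Coordinates are relative (0..1)
// so the dot stays in place when the outline is resized.
interface BodyDot {
  x: number;
  y: number;
  radius: number; // in pixels; larger means more intense
  color: string;  // drawn with transparency by the UI layer
  note: string;   // free-text sensation, e.g. "tight" or "tingling"
}

// Assumed limits; the brief does not specify sizes or timings.
const MIN_RADIUS = 8;
const MAX_RADIUS = 40;
const MAX_PRESS_MS = 2000;

// Linearly scale the radius with press duration, clamped to the limits.
function radiusForPress(pressMs: number): number {
  const clamped = Math.min(Math.max(pressMs, 0), MAX_PRESS_MS);
  return MIN_RADIUS + (MAX_RADIUS - MIN_RADIUS) * (clamped / MAX_PRESS_MS);
}

// Build the dot once the press ends.
function createDot(
  x: number,
  y: number,
  pressMs: number,
  color: string,
  note: string = ""
): BodyDot {
  return { x, y, radius: radiusForPress(pressMs), color, note };
}
```

In a browser, `pressMs` would typically be the time between a pointer-down and a pointer-up event on the outline.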

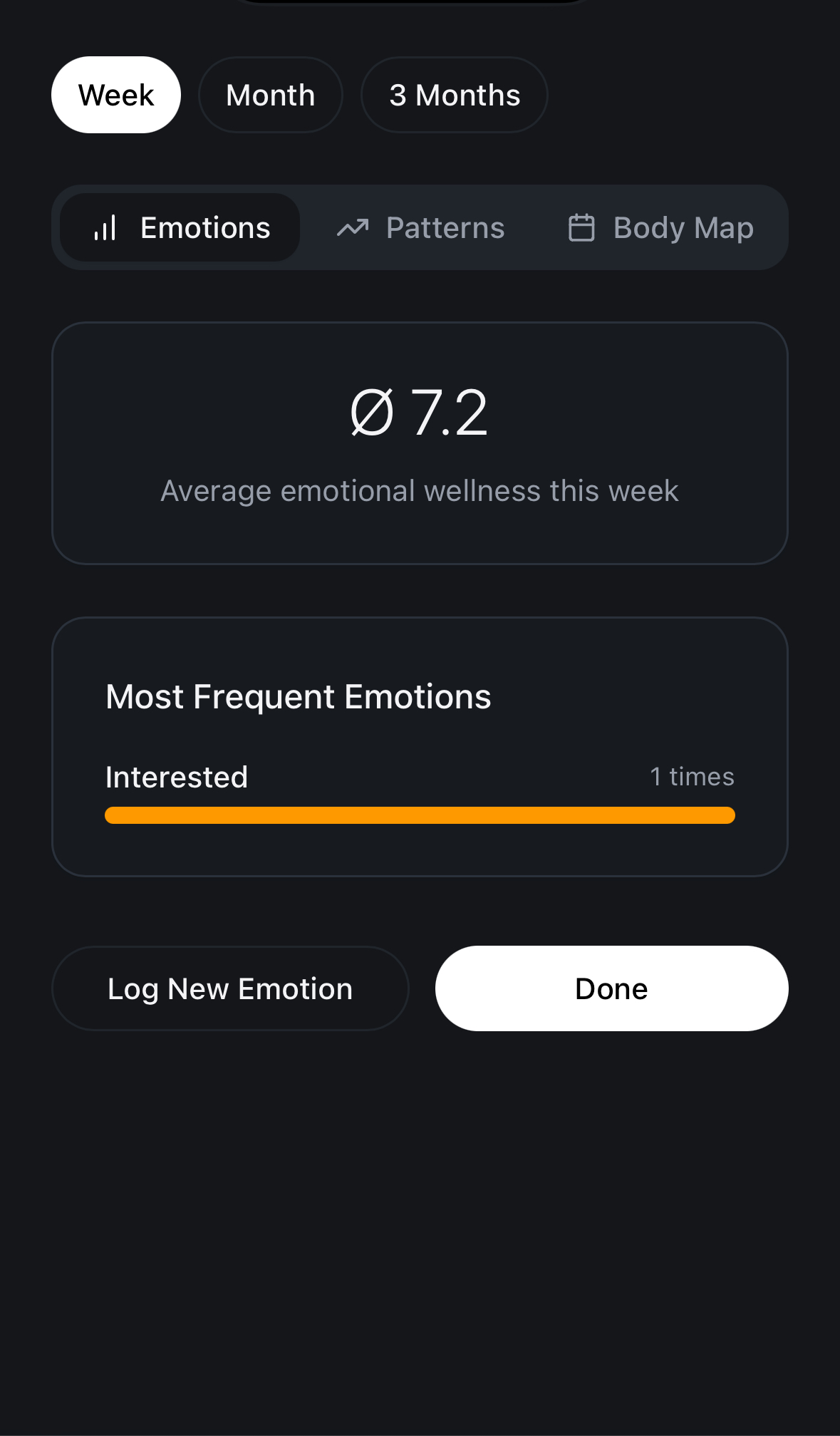

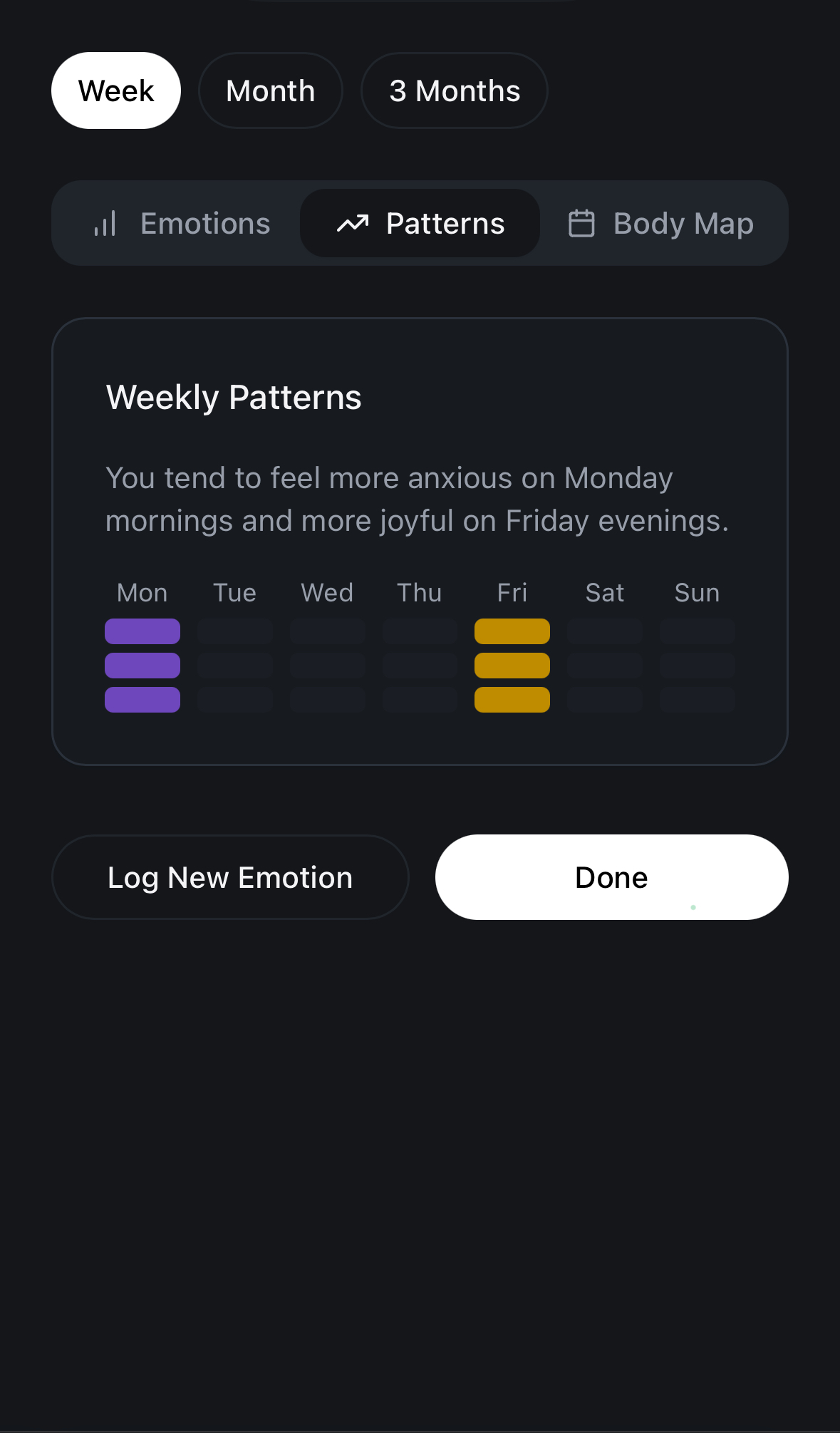

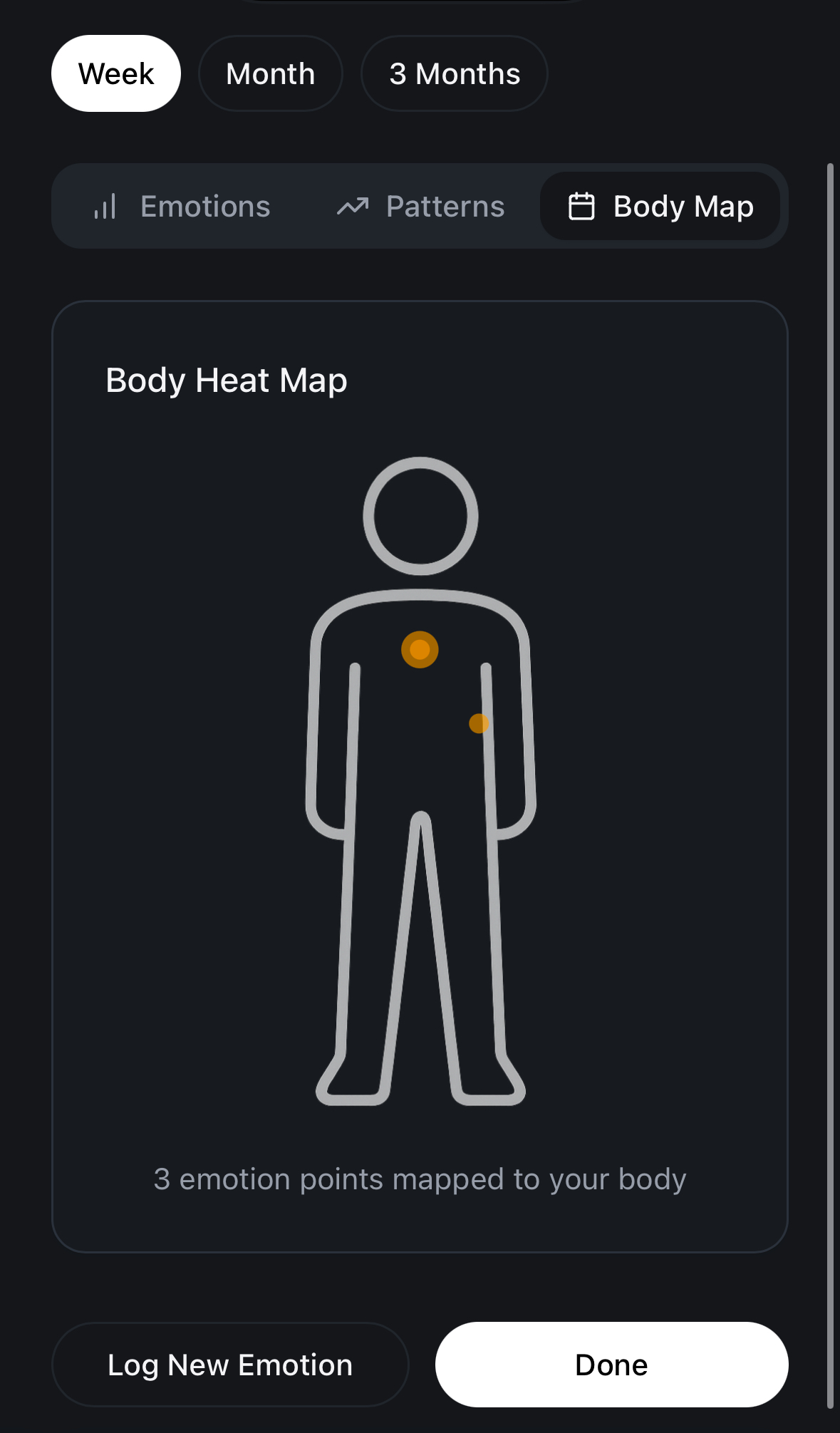

4. Emotion Statistics & Trends Screen

- Shows analytics and trends over time.

- Key features:

  - Emotion timeline showing which emotions were logged when.

  - Heatmap body image summarizing where emotions are felt most over a selected period.

  - Emotion frequency graphs over time (bar, line, pie, or scatter plots).

  - Filters to select time ranges and emotion types.
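The data behind the statistics screen could be derived from the log with a few small aggregations. This is a sketch under assumptions: the `LogEntry` shape, the epoch-millisecond timestamps, the grid-based heatmap, and the function names are hypothetical and not taken from the app.

```typescript
interface LoggedDot { x: number; y: number; radius: number; } // x, y in 0..1

interface LogEntry {
  timestamp: number;   // epoch milliseconds (assumed)
  emotions: string[];
  dots: LoggedDot[];
}

// Time-range filter: keep entries with from <= timestamp <= to.
function inRange(entries: LogEntry[], from: number, to: number): LogEntry[] {
  return entries.filter((e) => e.timestamp >= from && e.timestamp <= to);
}

// Count how often each emotion was logged (input for frequency graphs).
function emotionFrequency(entries: LogEntry[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const entry of entries) {
    for (const emotion of entry.emotions) {
      counts.set(emotion, (counts.get(emotion) ?? 0) + 1);
    }
  }
  return counts;
}

// Sum dot radii into a rows x cols grid laid over the body outline.
// Cells with higher totals are where sensations were felt most.
function bodyHeatmap(entries: LogEntry[], cols: number, rows: number): number[][] {
  const grid: number[][] = Array.from({ length: rows }, () =>
    new Array<number>(cols).fill(0)
  );
  const cell = (v: number, n: number) =>
    Math.min(n - 1, Math.max(0, Math.floor(v * n)));
  for (const entry of entries) {
    for (const dot of entry.dots) {
      const line = grid[cell(dot.y, rows)];
      const col = cell(dot.x, cols);
      if (line) line[col] = (line[col] ?? 0) + dot.radius;
    }
  }
  return grid;
}
```

Using the dot radius as the weight means long presses (higher intensity) contribute more to the heatmap than short taps.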

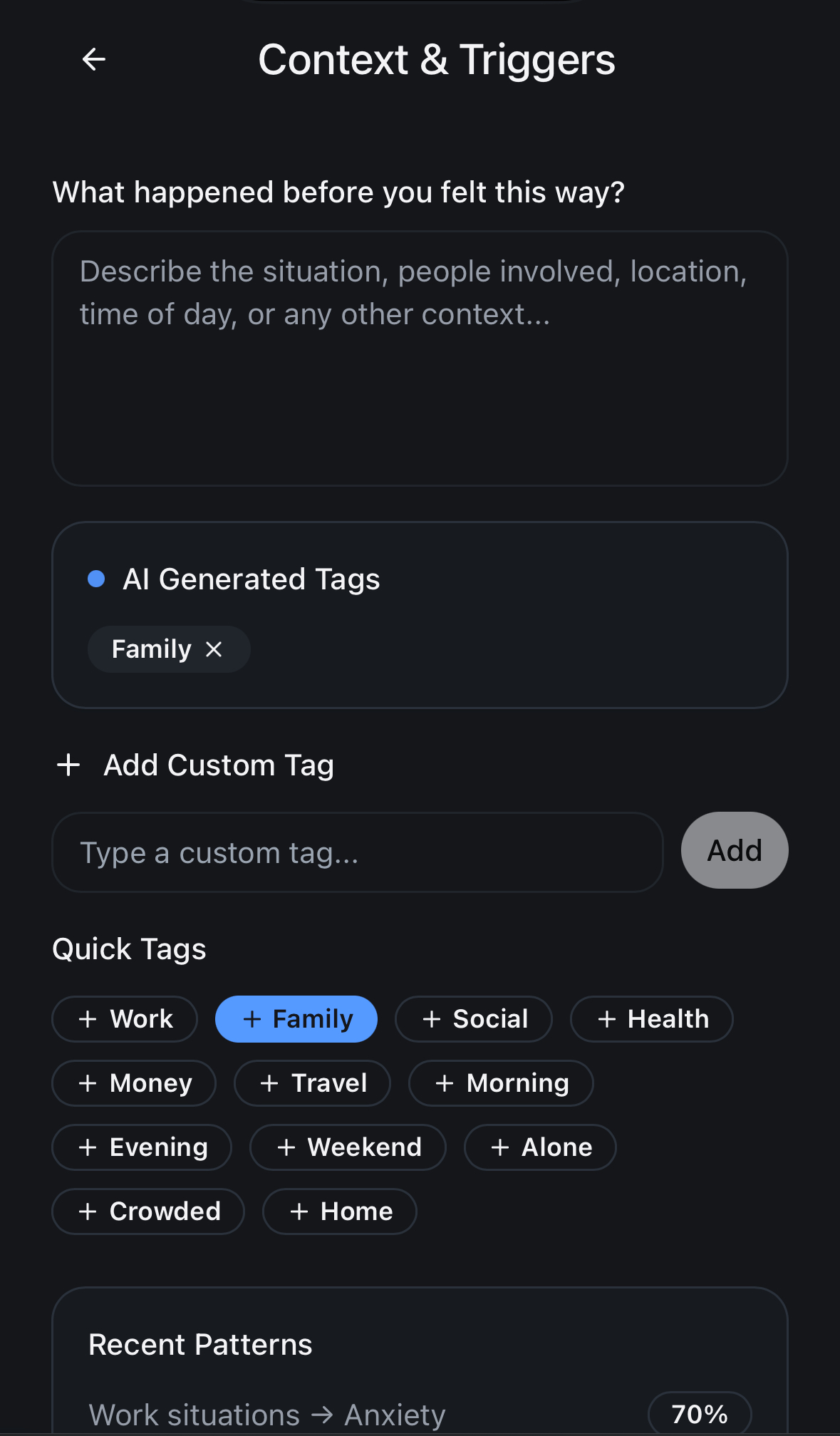

5. Trigger & Situation Logging Screen

- Users can add context for each logged emotion:

  - What happened just before?

  - Who was involved?

  - What was the setting?

- AI processes the input text to:

  - Identify keywords and generate tags (e.g. “conflict”, “deadline”, “family”).

  - Display these tags as chips for future selection.

- Patterns become visible over time:

  - See which situations or people tend to elicit specific emotions.

  - Find recurring triggers or emotional loops.
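The brief does not say how the AI tagging is done, so the sketch below swaps in a plain keyword lookup as a stand-in for it and then counts how often each tag co-occurs with each emotion. The keyword lists, the `suggestTags` and `tagEmotionCounts` names, and the `tag|emotion` key format are all assumptions for illustration.

```typescript
// Stand-in for the AI step: a fixed keyword list per tag (assumed values).
const TAG_KEYWORDS: Record<string, string[]> = {
  conflict: ["argument", "fight", "conflict"],
  deadline: ["deadline", "due"],
  family: ["family", "mother", "father", "sister", "brother"],
};

// Suggest tags whose keywords appear as whole words in the text.
function suggestTags(text: string): string[] {
  const words = new Set<string>(text.toLowerCase().match(/[a-z']+/g) ?? []);
  return Object.entries(TAG_KEYWORDS)
    .filter(([, keywords]) => keywords.some((k) => words.has(k)))
    .map(([tag]) => tag);
}

interface TaggedEntry {
  tags: string[];
  emotions: string[];
}

// Count tag/emotion pairs across entries; frequent pairs hint at
// recurring triggers. Keys have the form "tag|emotion".
function tagEmotionCounts(entries: TaggedEntry[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const entry of entries) {
    for (const tag of entry.tags) {
      for (const emotion of entry.emotions) {
        const key = `${tag}|${emotion}`;
        counts.set(key, (counts.get(key) ?? 0) + 1);
      }
    }
  }
  return counts;
}
```

A real version would likely replace `suggestTags` with a call to a language model; the co-occurrence count would work the same way on whatever tags that step returns.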

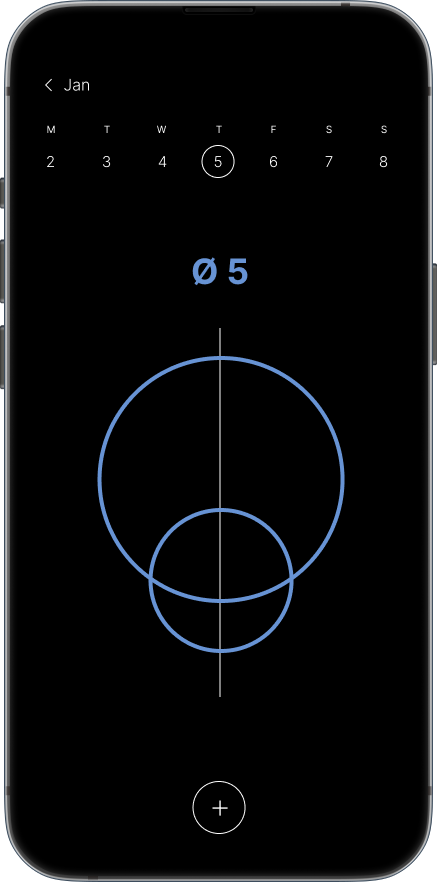

First round

Progress Examples

The first three images were generated from prompts that tried to describe a more detailed, realistic figure. When those attempts did not yield the desired result, I provided a reference image and asked the tool to implement it directly.

Learning

What Didn’t Work

- Vague prompts led to abstract, robotic shapes

- No image reference = AI misinterpretation

- Tool biased toward minimal, neutral figures

- Model seemed to lack training on realistic silhouettes

What Worked

- Providing a clear reference image

- Asking the tool to implement the reference directly instead of describing the figure in a prompt

How to Improve Prompting

- Be ultra-specific (anatomy, posture, style)

- Include visual references early

- State use case (e.g., emotion mapping app)

- Describe the desired visual style clearly (e.g., minimal, gender-neutral, human-like)
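One way to apply these points consistently is to assemble the prompt from named fields, so none of them gets left out. The following is only a sketch: the `FigurePromptSpec` fields, the `buildFigurePrompt` name, and the wording of the generated prompt are assumptions, not a format that Lovable requires.

```typescript
// Hypothetical checklist of what a figure prompt should state.
interface FigurePromptSpec {
  useCase: string;               // e.g. "emotion mapping app"
  anatomy: string;               // what the figure must show
  posture: string;
  style: string[];               // e.g. ["minimal", "gender-neutral"]
  referenceImage: string | null; // file name of an attached reference, if any
}

// Turn the spec into a prompt, one statement per line.
function buildFigurePrompt(spec: FigurePromptSpec): string {
  const lines = [
    `Use case: ${spec.useCase}.`,
    `Anatomy: ${spec.anatomy}.`,
    `Posture: ${spec.posture}.`,
    `Visual style: ${spec.style.join(", ")}.`,
  ];
  if (spec.referenceImage !== null) {
    lines.push(`Match the attached reference image (${spec.referenceImage}) as closely as possible.`);
  }
  return lines.join("\n");
}
```

Making the reference image an explicit field is a reminder to attach one early rather than after several failed attempts.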

Design 2